Google’s ATAP division became widely known once news of Project Tango reached the press. Things moved fast, and just a few weeks later I had the chance to put the Tango device’s hardware to the test at a Hackathon held last weekend in Timisoara. My team, the “Legendary coders”, was announced as the winner, so I’ll use this opportunity to show you what our project was about and to share some thoughts and impressions on this exciting event.

A menu to help you navigate

Short intro

Event pictures

Our project, the Camera 3D

Google Tango superframe

Camera 3D presentation video

Short intro

I got to the Startup Hub Timisoara on Friday evening, missing the initial presentation held earlier. My colleagues were already there, along with many other mobile developers and enthusiasts. They showed me the Tango device and shared some exciting details on what it does. Mihai Pora, a Google engineer from Krakow, Poland, had brought a dozen of these.

If you want to see the internals and more on the hardware, there’s a tear-down article here, with some high-res photos.

That evening I noticed that our device got hot quite fast, which made testing more difficult, but the next day we got a better device and the problem went away. The new one performed much better and there were no other stability issues, so development continued as planned. Everybody started focusing on exciting projects, and I loved the geeky feeling this Hackathon was already generating.

Our project, the Camera 3D

The first thing to do was to build a list of ideas. I was tempted to bring in some additional gear, like one of my robots, equip it with all kinds of sensors, including nuclear radiation detectors, and use a Tango device as the central processing unit. The Tango’s own sensors would help the robot find its way and map the space around it, including the values of the measured parameters (radiation heat map, temperature, air quality, etc.). I must admit that the Tango device makes a nice addition to any robotics-related application. But we came to the conclusion that, regardless of its many sensors, a phone remains a phone, and we don’t want to run after the robot whenever someone calls our mobile.

Getting back to phone-related applications, we also had the idea of a cooperative 3D painting app, where a group of people draw in the air and the Tango device sees the action and records the entire digital painting in 3D. The problem here was that it gets hard to keep track of the whole 3D drawing past a certain point, as not all of the users can see the Tango’s screen, where a preview is available.

Finally, another idea was to build an app for blind people, allowing them to navigate freely by making the Tango see for them and give audio cues, including about the faces of people around them. But considering the limited time available for the Hackathon project, we opted for a more doable task, while trying to stay in the phone apps area. Even so, as always happens in software, there were many hidden obstacles and the time was short, making the whole experience both exciting and very challenging.

The idea we opted for was a 3D camera application that takes a picture in an instant, then builds a 3D model using OpenGL and the recorded depth sensor data. This way we get to see more detail and our picture comes closer to reality. Things we would never see in a regular picture get recorded, and we can zoom or rotate the 3D model to inspect everything in detail.

Google Tango superframe

Each of us got a task assigned, in order to cover the requirements in the most efficient way. One thing we needed was the depth data, which we would use to build a 3D heightmap with OpenGL. My task was to access the Superframe data and grab the depth information. I have some previous experience with data structures, so for me this was a nice thing to do. We also got help from some nice people there at the hackathon. The specs show that the superframe is a block of concatenated data. We get this buffer in onPreviewFrame, just like regular camera frames. Its size in the current firmware is 2242560 bytes.

    @Override
    public void onPreviewFrame(byte[] data, Camera cameraPreFrame) {
        // Get the preview size from the camera parameters
        Camera.Parameters parameters = mCamera.getParameters();
        int h = parameters.getPreviewSize().height,
            w = parameters.getPreviewSize().width;

        // Feed the raw frame to our listener
        if (listener != null) listener.onPreviewFrame(data, w, h);

        // Get data buffers from the SUPERFRAME
        depth = new BufferDepth(data);
        smallRgb = new BufferSmallRgb(data);
        bigRgb = new BufferBigRgb(data);

        // In debug mode, dump each buffer to a file for offline inspection
        if (Constants.DEBUG) {
            Utils.saveDatatoFile(depth.getData(), 0, depth.getSize(), "/sdcard/buf-depth.raw");
            Utils.saveDatatoFile(smallRgb.getData(), 0, smallRgb.getSize(), "/sdcard/buf-smallrgb.raw");
            Utils.saveDatatoFile(bigRgb.getData(), 0, bigRgb.getSize(), "/sdcard/buf-bigrgb.raw");
        }
    }

At this point all the relevant data is conveniently extracted into three buffers: depth, smallRgb and bigRgb. Depth is what we’re looking for; it contains a 320×180 image at 16 bits per pixel. The smallRgb buffer is the image captured by the fisheye camera, 640×480 pixels in size, while bigRgb is the high-resolution 1280×720 color image. The part of the Camera 3D project that extracts the data is available here.
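As a quick sanity check on these dimensions, here is a minimal sketch that computes the expected byte size of each buffer. The class and method names are made up for illustration, and the 12 bits per pixel for the color image assumes the YUV420 encoding mentioned later in this post. What fills the rest of the 2242560-byte superframe is not covered here.

```java
// Illustrative helper (hypothetical name), not part of the Camera 3D project.
final class SuperframeSizes {
    // bitsPerPixel: 16 for depth, 8 for the fisheye image, 12 for YUV420 (assumed).
    static int bufferBytes(int width, int height, int bitsPerPixel) {
        return width * height * bitsPerPixel / 8;
    }

    public static void main(String[] args) {
        int depth = bufferBytes(320, 180, 16);
        int fisheye = bufferBytes(640, 480, 8);
        int color = bufferBytes(1280, 720, 12);
        System.out.println("depth=" + depth + " fisheye=" + fisheye + " color=" + color);
        // Bytes of the superframe not accounted for by the three images.
        System.out.println("remaining=" + (2242560 - depth - fisheye - color));
    }
}
```

The depth and fisheye results match the 115200 and 307200 bytes quoted in this post.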

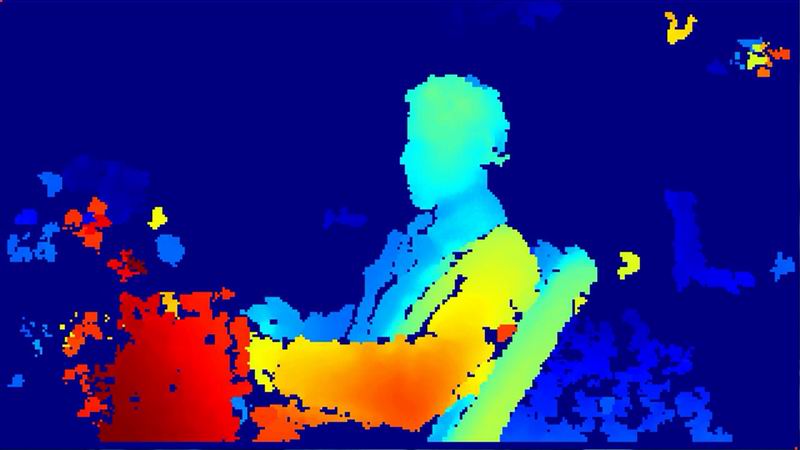

The depth buffer comes as a 115200-byte segment. Using any graphics processing software, we can view it as an image, with the depth information shown in shades of gray:

The amazing thing about the Tango device is that the grayscale image is actually correlated with real-world distances. For each pixel we know the distance from the camera to that particular location, in millimeters! This surely makes Tango a great device. To get the distance from the depth buffer, try something like:

    for (int bitmapIndex = 0; bitmapIndex < depth.getImgSize(); bitmapIndex++) {
        // Depth is stored in two bytes, low byte first; combine them into an int (millimeters).
        int pixDepthMm = ((depth.getData()[2 * bitmapIndex + 1] & 0xff) << 8)
                       | (depth.getData()[2 * bitmapIndex] & 0xff);
        // Pixel coordinates inside the depth image
        int pdx = bitmapIndex % depth.getWidth(),
            pdy = bitmapIndex / depth.getWidth();
    }
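The same conversion can be tried on a plain byte array, outside the app. This is only a sketch: the DepthDecoder class is a hypothetical stand-in for the project's BufferDepth, and it assumes the 320×180, two-bytes-per-pixel, low-byte-first layout described above.

```java
// Hypothetical stand-alone helper; the real project wraps this data in BufferDepth.
final class DepthDecoder {
    static final int WIDTH = 320;
    static final int HEIGHT = 180;

    /** Returns the depth in millimeters of pixel (x, y) from a raw depth buffer. */
    static int depthMm(byte[] depth, int x, int y) {
        int i = 2 * (y * WIDTH + x);          // two bytes per pixel
        int low = depth[i] & 0xff;            // mask to undo Java's sign extension
        int high = depth[i + 1] & 0xff;
        return (high << 8) | low;
    }
}
```

Masking each byte with 0xff matters because Java bytes are signed: without it, any byte above 0x7f would turn into a negative value when promoted to int.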

A few examples, which are actual dump files from my tests, can be seen here: depth-buffers.

The other buffers can be used in a similar way. Here is the smallRgb buffer (307200 bytes), decoded as a 640×480 8bpp bitmap:

The example of the fisheye buffer is available here: fisheye.
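For anyone who wants to display such an 8bpp buffer in code rather than in an image editor, here is a small sketch that expands each gray byte into an opaque ARGB pixel. The GrayToArgb class is a made-up name, and treating the buffer as one gray byte per pixel with no padding is an assumption based on the sizes above.

```java
// Hypothetical helper: expands an 8-bit grayscale buffer into packed ARGB pixels.
final class GrayToArgb {
    static int[] toArgb(byte[] gray) {
        int[] argb = new int[gray.length];
        for (int i = 0; i < gray.length; i++) {
            int v = gray[i] & 0xff;                            // unsigned gray level
            argb[i] = 0xff000000 | (v << 16) | (v << 8) | v;   // opaque, R = G = B
        }
        return argb;
    }
}
```

On Android, the resulting array should be usable with Bitmap.createBitmap(colors, 640, 480, Bitmap.Config.ARGB_8888) to get something that can be shown on screen.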

There were some issues decoding the high-resolution color image (encoded as YUV420), due to lack of time. In the little time we had, we only managed to get the depth data and plot it as a heightmap in OpenGL, but the results were already impressive. We used OpenCV to find the subject's face and used it to get the distance from our phone to the subject, in order to build a better 3D representation and exclude irrelevant data. This worked fine, but there was no time for some important calibrations. Maybe I'll find the time to continue the work on the Camera 3D app:

The pics show the depth data buffer and the result, nicely rendered with OpenGL. The camera preview image shows how we detected the face, with the computed distance displayed under the green rectangle highlighting the subject's face.
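One simple way to use the subject's distance to exclude irrelevant data is to keep only depth values within a band around it. The sketch below is not the exact code from our app: the DepthFilter name, the tolerance parameter and the use of 0 as a "no data" marker are all assumptions made for illustration.

```java
// Sketch only: the tolerance band and the 0 "no data" marker are assumptions.
final class DepthFilter {
    /** Returns a copy of depthMm where values farther than toleranceMm from subjectMm become 0. */
    static int[] keepNear(int[] depthMm, int subjectMm, int toleranceMm) {
        int[] out = new int[depthMm.length];
        for (int i = 0; i < depthMm.length; i++) {
            int d = depthMm[i];
            out[i] = Math.abs(d - subjectMm) <= toleranceMm ? d : 0;
        }
        return out;
    }
}
```

With the face at, say, 1000 mm and a tolerance of 200 mm, the background and anything much closer than the subject drop out before the heightmap is built.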

Our issues were related more to OpenGL and very little to the Tango, which did well and made our work easy. The problem was that we had little time for a proper OpenGL heightmap implementation, so in our app the heightmap was a square cropped from the 320×180 depth image. This is easily fixable and, as I said, I might do that at a later time.
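A possible fix for the square crop is to generate the vertex grid from the full rectangular depth image and scale it by its longer side, so the aspect ratio is preserved. The following is a rough sketch of that idea, not the code from our app: HeightmapGrid, mmToUnits and the choice of a negative z axis for depth are all hypothetical, and it assumes both dimensions are at least 2.

```java
// Sketch: builds x, y, z vertex positions for a full width x height depth grid.
final class HeightmapGrid {
    static float[] vertices(int[] depthMm, int width, int height, float mmToUnits) {
        float[] v = new float[width * height * 3];
        // Map the longer side to [-1, 1]; the shorter side keeps the aspect ratio.
        float scale = 2f / (Math.max(width, height) - 1);
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int i = y * width + x;
                v[3 * i] = (x - (width - 1) / 2f) * scale;       // x, centered
                v[3 * i + 1] = ((height - 1) / 2f - y) * scale;  // y, image row 0 at the top
                v[3 * i + 2] = -depthMm[i] * mmToUnits;          // z, farther = more negative
            }
        }
        return v;
    }
}
```

For the 320×180 depth image this gives 57600 vertices, which could then be uploaded to OpenGL as a vertex buffer and drawn as points or as a triangle grid.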

Camera 3D presentation video

We were announced as the winners, so the "Legendary coders" were happy to see their hard work appreciated.

There were many interesting projects developed during this short time, and you can read more on them here. Alex Palcuie also took part in the Hackathon, but he sacrificed a considerable part of his time documenting the event way better than I did, so make sure you check out his blog post. All in all this was a great event that brought a lot of enthusiasm for this neat piece of innovation from Google, and we're all excited to see what comes next. I hope we will see more events like this one, which challenge our creativity and motivate us to push the boundaries of technology toward new exciting projects.

You might also be interested in the Project Tango Developers Community on G+.